
texts="""나라의 말이 중국과 달라

한자와는 서로 통하지 아니하여서

이런 까닭으로 어리석은 백성이

말하고자 하는 바가 있어도

마침내 제 뜻을 능히 펴지

못하는 사람이 많다

내가 이를 위하여 가엾게 여겨

새로 스물여덟 자를 만드니

사람마다 하여금 쉽게 익혀 날마다 씀에

편안케 하고자 할 따름이다"""

texts=texts.split('\n')

display(texts)

['나라의 말이 중국과 달라 ',

'한자와는 서로 통하지 아니하여서 ',

'이런 까닭으로 어리석은 백성이 ',

'말하고자 하는 바가 있어도 ',

'마침내 제 뜻을 능히 펴지',

'못하는 사람이 많다',

'내가 이를 위하여 가엾게 여겨 ',

'새로 스물여덟 자를 만드니',

'사람마다 하여금 쉽게 익혀 날마다 씀에 ',

'편안케 하고자 할 따름이다']

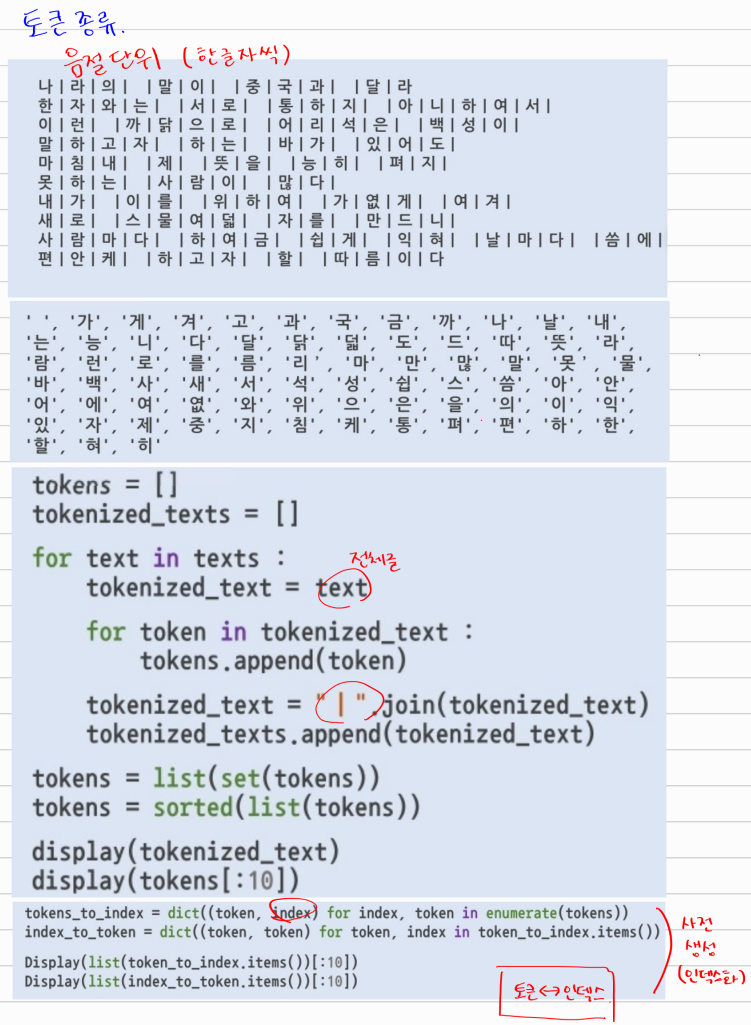

Syllable level

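tokens = []            # every syllable seen so far
tokenized_texts = []   # each line with its syllables joined by "|"

for text in texts:
    # Iterating over a string yields one character (syllable) at a time.
    tokenized_text = text
    for token in tokenized_text:
        tokens.append(token)
    tokenized_text = "|".join(tokenized_text)
    tokenized_texts.append(tokenized_text)

# Keep only unique syllables, sorted so that the indices are reproducible.
tokens = sorted(set(tokens))

display(tokenized_text)   # shows only the last line, because the variable is reused in the loop
display(tokens[:10])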

'편|안|케| |하|고|자| |할| |따|름|이|다'

[' ', '가', '게', '겨', '고', '과', '국', '금', '까', '나']

token_to_index = dict((token, index) for index, token in enumerate(tokens))

index_to_token = dict((index, token) for token, index in token_to_index.items())

display(list(token_to_index.items())[:10])

display(list(index_to_token.items())[:10])

[(' ', 0),

('가', 1),

('게', 2),

('겨', 3),

('고', 4),

('과', 5),

('국', 6),

('금', 7),

('까', 8),

('나', 9)]

[(0, ' '),

(1, '가'),

(2, '게'),

(3, '겨'),

(4, '고'),

(5, '과'),

(6, '국'),

(7, '금'),

(8, '까'),

(9, '나')]
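With both mappings in place, a sentence can be turned into a list of integer indices and back again. The following is a minimal sketch of that round trip. The helper names `encode` and `decode` and the two-line `sample_texts` list are assumptions introduced here for illustration; they are not part of the original notebook.

```python
# Two lines of the text above, so that this block runs on its own.
sample_texts = ['나라의 말이 중국과 달라', '못하는 사람이 많다']

# Build the syllable vocabulary the same way as above: unique characters, sorted.
vocab = sorted(set(ch for text in sample_texts for ch in text))
token_to_index = {token: index for index, token in enumerate(vocab)}
index_to_token = {index: token for token, index in token_to_index.items()}


def encode(text):
    """Map each syllable (character) of `text` to its integer index."""
    return [token_to_index[ch] for ch in text]


def decode(indices):
    """Map a list of integer indices back to the original string."""
    return "".join(index_to_token[i] for i in indices)


encoded = encode('나라의 말이')
print(encoded)
print(decode(encoded))
```

Note that `encode` raises a `KeyError` for any syllable that was not in the text used to build the vocabulary.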

Word level

tokenw = []            # every word seen so far
tokenized_texts = []   # reset the list so the syllable-level results are not carried over

for text in texts:
    tokenized_text = text.split()   # split on whitespace
    for token in tokenized_text:
        tokenw.append(token)
    tokenized_text = "|".join(tokenized_text)
    tokenized_texts.append(tokenized_text)

# Keep only unique words, sorted so that the indices are reproducible.
tokenw = sorted(set(tokenw))

display(tokenized_texts)
display(tokenw[:10])

['나라의|말이|중국과|달라',
 '한자와는|서로|통하지|아니하여서',
 '이런|까닭으로|어리석은|백성이',
 '말하고자|하는|바가|있어도',
 '마침내|제|뜻을|능히|펴지',
 '못하는|사람이|많다',
 '내가|이를|위하여|가엾게|여겨',
 '새로|스물여덟|자를|만드니',
 '사람마다|하여금|쉽게|익혀|날마다|씀에',
 '편안케|하고자|할|따름이다']

['가엾게', '까닭으로', '나라의', '날마다', '내가', '능히', '달라', '따름이다', '뜻을', '마침내']

tokens_to_index = dict((token1, index) for index, token1 in enumerate(tokenw))

index_to_token = dict((index, token1) for token1, index in tokens_to_index.items())

display(list(tokens_to_index.items())[:10])

display(list(index_to_token.items())[:10])

[('가엾게', 0),

('까닭으로', 1),

('나라의', 2),

('날마다', 3),

('내가', 4),

('능히', 5),

('달라', 6),

('따름이다', 7),

('뜻을', 8),

('마침내', 9)]

[(0, '가엾게'),

(1, '까닭으로'),

(2, '나라의'),

(3, '날마다'),

(4, '내가'),

(5, '능히'),

(6, '달라'),

(7, '따름이다'),

(8, '뜻을'),

(9, '마침내')]
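The two granularities trade sequence length against vocabulary size. As a rough illustration, the sketch below tokenizes one line of the text both ways and compares the counts. The variable names are assumptions made for this example only.

```python
line = '사람마다 하여금 쉽게 익혀 날마다 씀에'

syllable_tokens = list(line)    # one token per character, spaces included
word_tokens = line.split()      # one token per whitespace-separated word

# Sequence length and number of distinct tokens for each granularity.
print('syllable:', len(syllable_tokens), len(set(syllable_tokens)))
print('word:    ', len(word_tokens), len(set(word_tokens)))
```

Syllable-level tokens produce a longer sequence but reuse a small set of symbols, while word-level tokens produce a short sequence in which nearly every token is distinct.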

Morpheme level

!pip install konlpy

Defaulting to user installation because normal site-packages is not writeable

WARNING: Ignoring invalid distribution - (c:\users\yimst\appdata\roaming\python\python39\site-packages)

WARNING: Ignoring invalid distribution -ensorflow-gpu (c:\users\yimst\appdata\roaming\python\python39\site-packages)


Collecting konlpy

Downloading konlpy-0.6.0-py2.py3-none-any.whl (19.4 MB)

--------------------------------------- 19.4/19.4 MB 50.4 MB/s eta 0:00:00

Collecting JPype1>=0.7.0

Downloading JPype1-1.4.1-cp39-cp39-win_amd64.whl (345 kB)

---------------------------------------- 345.2/345.2 kB ? eta 0:00:00

Requirement already satisfied: numpy>=1.6 in c:\programdata\anaconda3\lib\site-packages (from konlpy) (1.21.5)

Requirement already satisfied: lxml>=4.1.0 in c:\programdata\anaconda3\lib\site-packages (from konlpy) (4.9.1)

Requirement already satisfied: packaging in c:\programdata\anaconda3\lib\site-packages (from JPype1>=0.7.0->konlpy) (21.3)

Requirement already satisfied: pyparsing!=3.0.5,>=2.0.2 in c:\programdata\anaconda3\lib\site-packages (from packaging->JPype1>=0.7.0->konlpy) (3.0.9)

Installing collected packages: JPype1, konlpy

Successfully installed JPype1-1.4.1 konlpy-0.6.0

from konlpy.tag import Okt

tokenizer = Okt()   # Okt morphological analyzer (KoNLPy needs a Java runtime)

tokens = []            # every morpheme seen so far
tokenizeds_text = []   # each line with its morphemes joined by "|"

for text in texts:
    tokenized_text = tokenizer.morphs(text)   # split the line into morphemes
    for token in tokenized_text:
        tokens.append(token)
    tokenized_text = "|".join(tokenized_text)
    tokenizeds_text.append(tokenized_text)

# Keep only unique morphemes, sorted so that the indices are reproducible.
tokens = sorted(set(tokens))
display(tokens[:10])

tokens_to_index = dict((token1, index) for index, token1 in enumerate(tokens))
display(list(tokens_to_index.items())[:10])

index_to_token = dict((index, token1) for token1, index in tokens_to_index.items())
display(list(index_to_token.items())[:10])

['가', '가엾게', '과', '까닭', '나라', '날', '내', '능', '달라', '따름']

[('가', 0),

('가엾게', 1),

('과', 2),

('까닭', 3),

('나라', 4),

('날', 5),

('내', 6),

('능', 7),

('달라', 8),

('따름', 9)]

[(0, '가'),

(1, '가엾게'),

(2, '과'),

(3, '까닭'),

(4, '나라'),

(5, '날'),

(6, '내'),

(7, '능'),

(8, '달라'),

(9, '따름')]

Feature vectorization

One-hot encoding

import numpy as np

tokens = ['한글', '딥러닝', '케라스', '세종대왕', '광화문', '자연어처리']

token_to_index = dict((token, index) for index, token in enumerate(tokens))
display(token_to_index)

for token in tokens:
    # Start from an all-zero vector and set only this token's position to 1.
    token_onehot = np.zeros(len(tokens), dtype='float32')
    token_onehot[token_to_index[token]] = 1
    print(token + '\t' + str(token_onehot))

{'한글': 0, '딥러닝': 1, '케라스': 2, '세종대왕': 3, '광화문': 4, '자연어처리': 5}

한글 [1. 0. 0. 0. 0. 0.]

딥러닝 [0. 1. 0. 0. 0. 0.]

케라스 [0. 0. 1. 0. 0. 0.]

세종대왕 [0. 0. 0. 1. 0. 0.]

광화문 [0. 0. 0. 0. 1. 0.]

자연어처리 [0. 0. 0. 0. 0. 1.]
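The same vectors can also be produced for a whole token sequence at once. The sketch below is one possible way to do it, relying on the fact that row i of an identity matrix is the one-hot vector for index i. The `sentence` list is a hypothetical example, not data from the original post.

```python
import numpy as np

tokens = ['한글', '딥러닝', '케라스', '세종대왕', '광화문', '자연어처리']
token_to_index = {token: index for index, token in enumerate(tokens)}

# Row i of the identity matrix is the one-hot vector for index i.
identity = np.eye(len(tokens), dtype='float32')

sentence = ['세종대왕', '한글']                     # hypothetical token sequence
indices = [token_to_index[t] for t in sentence]
onehot = identity[indices]                         # shape: (len(sentence), len(tokens))
print(onehot)

# argmax along each row recovers the original indices, and from them the tokens.
recovered = [tokens[i] for i in onehot.argmax(axis=1)]
print(recovered)
```

Each vector is as long as the vocabulary, so this representation grows quickly as the number of distinct tokens increases.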

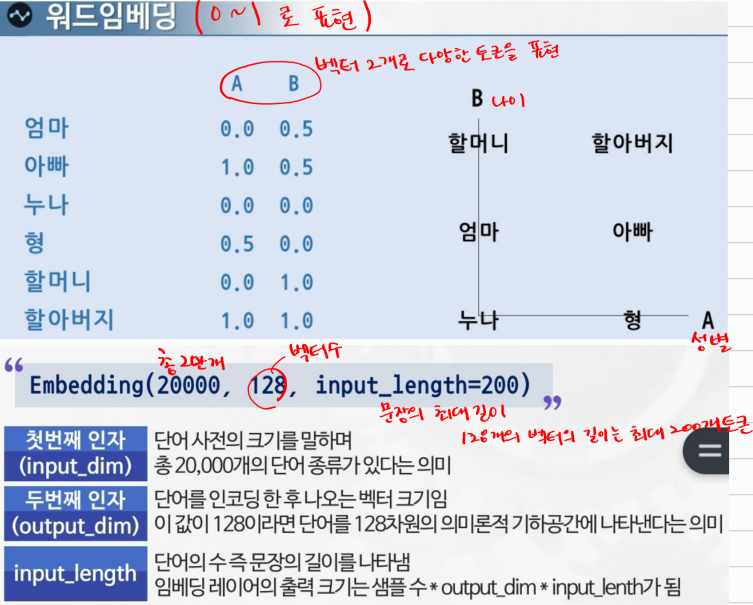

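input_length is the length of the sentence to be learned at one time, that is, the number of tokens in each input sequence. If a Flatten layer comes next, input_length must be specified, because the size of the flattened output depends on it.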
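To see why a fixed length matters, the following NumPy sketch imitates an embedding-style lookup followed by flattening. It assumes the sentence above refers to a layer that maps each token index to a vector; it is a plain NumPy illustration, not the Keras API. The sizes, the `pad` helper, and the sample `batch` are all hypothetical.

```python
import numpy as np

vocab_size, embedding_dim, input_length = 10, 4, 5   # hypothetical sizes

# Stand-in for a learned embedding table: one row (vector) per token index.
rng = np.random.default_rng(0)
embedding_matrix = rng.normal(size=(vocab_size, embedding_dim)).astype('float32')


def pad(sequence, length, pad_index=0):
    """Truncate or right-pad a list of indices to exactly `length` items."""
    return (sequence + [pad_index] * length)[:length]


batch = [[3, 1, 4], [1, 5, 9, 2, 6, 5, 3]]            # sequences of different lengths
padded = np.array([pad(seq, input_length) for seq in batch])

embedded = embedding_matrix[padded]             # (batch, input_length, embedding_dim)
flattened = embedded.reshape(len(batch), -1)    # (batch, input_length * embedding_dim)
print(embedded.shape, flattened.shape)          # (2, 5, 4) (2, 20)
```

The flattened width is `input_length * embedding_dim`, so any layer that follows can only be sized if the sequence length is known in advance.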
