
For every frame, the ONNX model is run to obtain bounding-box (bbox) coordinates.

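The input to the ONNX model is the frame's pixel data, resized to 640x640 and normalized to [0, 1] (each RGB value divided by 255). It is passed as a tensor of shape [1, 3, 640, 640] (batch, channel, height, width).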
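The layout change in that step can be sketched with the standard library alone. A decoded frame stores pixels interleaved (height, width, RGB), while the model expects planar channels. Every value therefore moves from index `(y * width + x) * 3 + c` to `c * height * width + y * width + x`, which is where ndarray's standard layout places element `[0, c, y, x]`. The helper name `hwc_to_nchw` is hypothetical, and the sketch assumes the frame has already been resized to the model's input size.

```rust
// Hypothetical helper: packs an interleaved RGB buffer (HWC, u8) into a planar
// CHW f32 buffer scaled to [0, 1], i.e. the data of a [1, 3, height, width] tensor.
fn hwc_to_nchw(rgb: &[u8], width: usize, height: usize) -> Vec<f32> {
    assert_eq!(rgb.len(), width * height * 3, "buffer must be width * height * 3 bytes");
    let plane = width * height;
    let mut out = vec![0.0f32; 3 * plane];
    for y in 0..height {
        for x in 0..width {
            let src = (y * width + x) * 3; // interleaved: R, G, B for each pixel
            for c in 0..3 {
                // planar: all R values first, then all G, then all B
                out[c * plane + y * width + x] = rgb[src + c] as f32 / 255.0;
            }
        }
    }
    out
}

fn main() {
    // A 2x1 image: one red pixel followed by one white pixel
    let rgb = [255u8, 0, 0, 255, 255, 255];
    println!("{:?}", hwc_to_nchw(&rgb, 2, 1)); // [1.0, 1.0, 0.0, 1.0, 0.0, 1.0]
}
```

The R plane comes first (`1.0, 1.0`), then G (`0.0, 1.0`), then B (`0.0, 1.0`). This is the same ordering that `prepare_input` produces when it writes `input[[0, c, y, x]]`.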

- https://github.com/pykeio/ort/blob/a92e4d1e6d99674dc1d04ed22743c3dd1bc59186/tests/onnx.rs#L16

- https://dev.to/andreygermanov/how-to-create-yolov8-based-object-detection-web-service-using-python-julia-nodejs-javascript-go-and-rust-4o8e#rust

```rust
// Written against the ort 1.x and video-rs 0.x APIs of mid-2023; later releases
// of both crates changed these types, so pin the versions you build against.
use std::{path::PathBuf, sync::Arc, time::SystemTime};

use image::{
    imageops::{resize, FilterType},
    Rgb, RgbImage,
};
use imageproc::{drawing::draw_hollow_rect, rect::Rect};
use ndarray::{s, Array, Array3, Axis, IxDyn};
use ort::{environment::Environment, tensor::InputTensor, Session, SessionBuilder};
use video_rs::{self, Decoder, Encoder, EncoderSettings, Locator, Time};

// Array of YOLOv8 class labels (the 80 COCO classes, in model output order)
const YOLO_CLASSES: [&str; 80] = [
    "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat",
    "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse",
    "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie",
    "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove",
    "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon",
    "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut",
    "cake", "chair", "couch", "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse",
    "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink", "refrigerator", "book",
    "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush",
];
```

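```rust
// Runs YOLOv8 on one frame and returns (x1, y1, x2, y2, label, prob) boxes
// in the frame's own pixel coordinates.
fn detect_objects_on_image(model: &Session, buf: RgbImage) -> Vec<(f32, f32, f32, f32, &'static str, f32)> {
    let (input, img_width, img_height) = prepare_input(buf);
    let output = run_model(model, input);
    process_output(output, img_width, img_height)
}

// Resizes the frame to 640x640 and packs it into a [1, 3, 640, 640] f32 tensor
// with every channel value scaled to [0, 1].
fn prepare_input(buf: RgbImage) -> (Array<f32, IxDyn>, u32, u32) {
    // Keep the original size so the boxes can be mapped back to it later
    let (img_width, img_height) = buf.dimensions();
    let resized = resize(&buf, 640, 640, FilterType::Nearest);
    let mut input = Array::zeros((1, 3, 640, 640)).into_dyn();
    for (x, y, pixel) in resized.enumerate_pixels() {
        let (x, y) = (x as usize, y as usize);
        let [r, g, b] = pixel.0;
        input[[0, 0, y, x]] = (r as f32) / 255.0;
        input[[0, 1, y, x]] = (g as f32) / 255.0;
        input[[0, 2, y, x]] = (b as f32) / 255.0;
    }
    (input, img_width, img_height)
}

// Runs the session and returns the first output transposed with .t(),
// e.g. [1, 84, 8400] -> [8400, 84, 1], so each row is one candidate box.
fn run_model(model: &Session, input: Array<f32, IxDyn>) -> Array<f32, IxDyn> {
    let input = InputTensor::FloatTensor(input);
    let outputs = model.run([input]).unwrap();
    // Bind to a local first: the extracted tensor borrows `outputs`
    let output = outputs.get(0).unwrap().try_extract::<f32>().unwrap().view().t().into_owned();
    output
}
```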
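`run_model` transposes because the YOLOv8 detection head puts the features on the second axis. For the 80-class yolov8n export at 640x640, the output is [1, 84, 8400]: 4 box values (xc, yc, w, h) plus 80 class scores for each of 8400 candidates (80x80 + 40x40 + 20x20 grid cells from strides 8, 16 and 32). After `.t()` the shape is [8400, 84, 1], so `process_output` can walk one candidate per row.

The same data can also be read in place, without the transpose. Below is a std-only sketch of that indexing. `read_candidate` is a hypothetical helper, not part of ort, and the toy buffer stands in for a real model output.

```rust
// Hypothetical helper: reads candidate `i` from a YOLOv8 output stored
// channel-major as [num_features, num_candidates] (the batch axis dropped).
// Returns (xc, yc, w, h, class_id, best_score).
fn read_candidate(output: &[f32], num_features: usize, num_candidates: usize, i: usize) -> (f32, f32, f32, f32, usize, f32) {
    assert_eq!(output.len(), num_features * num_candidates);
    // Feature f of candidate i lives at f * num_candidates + i
    let at = |f: usize| output[f * num_candidates + i];
    let (mut class_id, mut best) = (0, f32::MIN);
    for c in 0..num_features - 4 {
        let score = at(4 + c); // class scores start after the 4 box values
        if score > best {
            class_id = c;
            best = score;
        }
    }
    (at(0), at(1), at(2), at(3), class_id, best)
}

fn main() {
    // Toy output: 4 box features + 2 classes (6 features) for 2 candidates.
    // Each line holds one feature for every candidate.
    let output: [f32; 12] = [
        100.0, 300.0, // xc
        120.0, 320.0, // yc
        40.0, 60.0, // w
        80.0, 90.0, // h
        0.9, 0.1, // class 0 score
        0.2, 0.7, // class 1 score
    ];
    println!("{:?}", read_candidate(&output, 6, 2, 1)); // (300.0, 320.0, 60.0, 90.0, 1, 0.7)
}
```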

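```rust
// Turns raw YOLOv8 rows into person boxes in original-frame pixels,
// then applies greedy non-maximum suppression (NMS).
fn process_output(output: Array<f32, IxDyn>, img_width: u32, img_height: u32) -> Vec<(f32, f32, f32, f32, &'static str, f32)> {
    let mut boxes = Vec::new();
    // [8400, 84, 1] -> [8400, 84]: each row is (xc, yc, w, h, 80 class scores)
    let output = output.slice(s![.., .., 0]);
    for row in output.axis_iter(Axis(0)) {
        let row: Vec<f32> = row.iter().copied().collect();
        // Best-scoring class for this candidate
        let (class_id, prob) = row.iter().skip(4).enumerate()
            .map(|(index, value)| (index, *value))
            .reduce(|accum, row| if row.1 > accum.1 { row } else { accum })
            .unwrap();
        // Keep only "person" (class 0) detections with probability >= 0.5
        if class_id != 0 || prob < 0.5 {
            continue;
        }
        let label = YOLO_CLASSES[class_id];
        // Scale from the 640x640 model input back to the original frame size
        let xc = row[0] / 640.0 * (img_width as f32);
        let yc = row[1] / 640.0 * (img_height as f32);
        let w = row[2] / 640.0 * (img_width as f32);
        let h = row[3] / 640.0 * (img_height as f32);
        boxes.push((xc - w / 2.0, yc - h / 2.0, xc + w / 2.0, yc + h / 2.0, label, prob));
    }
    // NMS: keep the most confident box, drop boxes overlapping it with IoU >= 0.7, repeat
    boxes.sort_by(|box1, box2| box2.5.total_cmp(&box1.5));
    let mut result = Vec::new();
    while !boxes.is_empty() {
        result.push(boxes[0]);
        boxes = boxes.iter().filter(|box1| iou(&boxes[0], box1) < 0.7).copied().collect();
    }
    result
}

// iou() is called above but not shown in the original post; this is a standard
// intersection-over-union, clamping negative overlaps to zero.
fn iou(a: &(f32, f32, f32, f32, &'static str, f32), b: &(f32, f32, f32, f32, &'static str, f32)) -> f32 {
    let iw = (a.2.min(b.2) - a.0.max(b.0)).max(0.0);
    let ih = (a.3.min(b.3) - a.1.max(b.1)).max(0.0);
    let inter = iw * ih;
    let union_area = (a.2 - a.0) * (a.3 - a.1) + (b.2 - b.0) * (b.3 - b.1) - inter;
    inter / union_area
}
```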
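The NMS step at the end of `process_output` is hard to check against a live model, so here is a std-only sketch of it on plain (x1, y1, x2, y2, score) tuples. The names `Scored`, `iou` and `nms` are hypothetical. The IoU clamps negative overlaps to zero so that disjoint boxes never count as overlapping.

```rust
// A detection as (x1, y1, x2, y2, score).
type Scored = (f32, f32, f32, f32, f32);

// Intersection over union; negative overlaps are clamped to zero.
fn iou(a: &Scored, b: &Scored) -> f32 {
    let iw = (a.2.min(b.2) - a.0.max(b.0)).max(0.0);
    let ih = (a.3.min(b.3) - a.1.max(b.1)).max(0.0);
    let inter = iw * ih;
    let union_area = (a.2 - a.0) * (a.3 - a.1) + (b.2 - b.0) * (b.3 - b.1) - inter;
    inter / union_area
}

// Greedy NMS: take the highest-scoring box, drop every remaining box whose
// IoU with it is >= `threshold`, and repeat until nothing is left.
fn nms(mut boxes: Vec<Scored>, threshold: f32) -> Vec<Scored> {
    boxes.sort_by(|a, b| b.4.total_cmp(&a.4)); // highest score first
    let mut kept = Vec::new();
    while !boxes.is_empty() {
        let best = boxes.remove(0);
        boxes.retain(|b| iou(&best, b) < threshold);
        kept.push(best);
    }
    kept
}

fn main() {
    let boxes: Vec<Scored> = vec![
        (0.0, 0.0, 10.0, 10.0, 0.9),
        (1.0, 0.0, 11.0, 10.0, 0.8), // IoU with the first box = 90 / 110, about 0.82
        (20.0, 20.0, 30.0, 30.0, 0.6), // disjoint from both
    ];
    println!("{}", nms(boxes.clone(), 0.7).len()); // 2
    println!("{}", nms(boxes, 0.9).len()); // 3
}
```

With the post's threshold of 0.7, the two boxes that overlap at IoU ≈ 0.82 collapse into one. At 0.9 both survive. A lower threshold therefore suppresses more boxes, and in a dense crowd that can include neighbouring people whose boxes overlap heavily.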

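```rust
// Converts a decoded frame ([height, width, 3] u8 array) into an RgbImage.
fn array_to_image(arr: Array3<u8>) -> RgbImage {
    let (height, width, _) = arr.dim();
    // into_raw_vec returns elements in memory order, so this assumes the frame
    // is in standard (row-major) layout
    let raw = arr.into_raw_vec();
    RgbImage::from_raw(width as u32, height as u32, raw)
        .expect("container should have the right size for the image dimensions")
}

// Converts an RgbImage back into a [height, width, 3] array for the encoder.
fn image_to_array(buf: RgbImage) -> Array3<u8> {
    let (width, height) = buf.dimensions();
    Array3::from_shape_vec((height as usize, width as usize, 3), buf.into_raw())
        .expect("buffer length should equal height * width * 3")
}
```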

fn main(){

let env = Arc::new(Environment::builder().with_name("YOLOv8").build().unwrap());

let model = SessionBuilder::new(&env).unwrap().with_model_from_file("yolov8n.onnx").unwrap();

video_rs::init().unwrap();

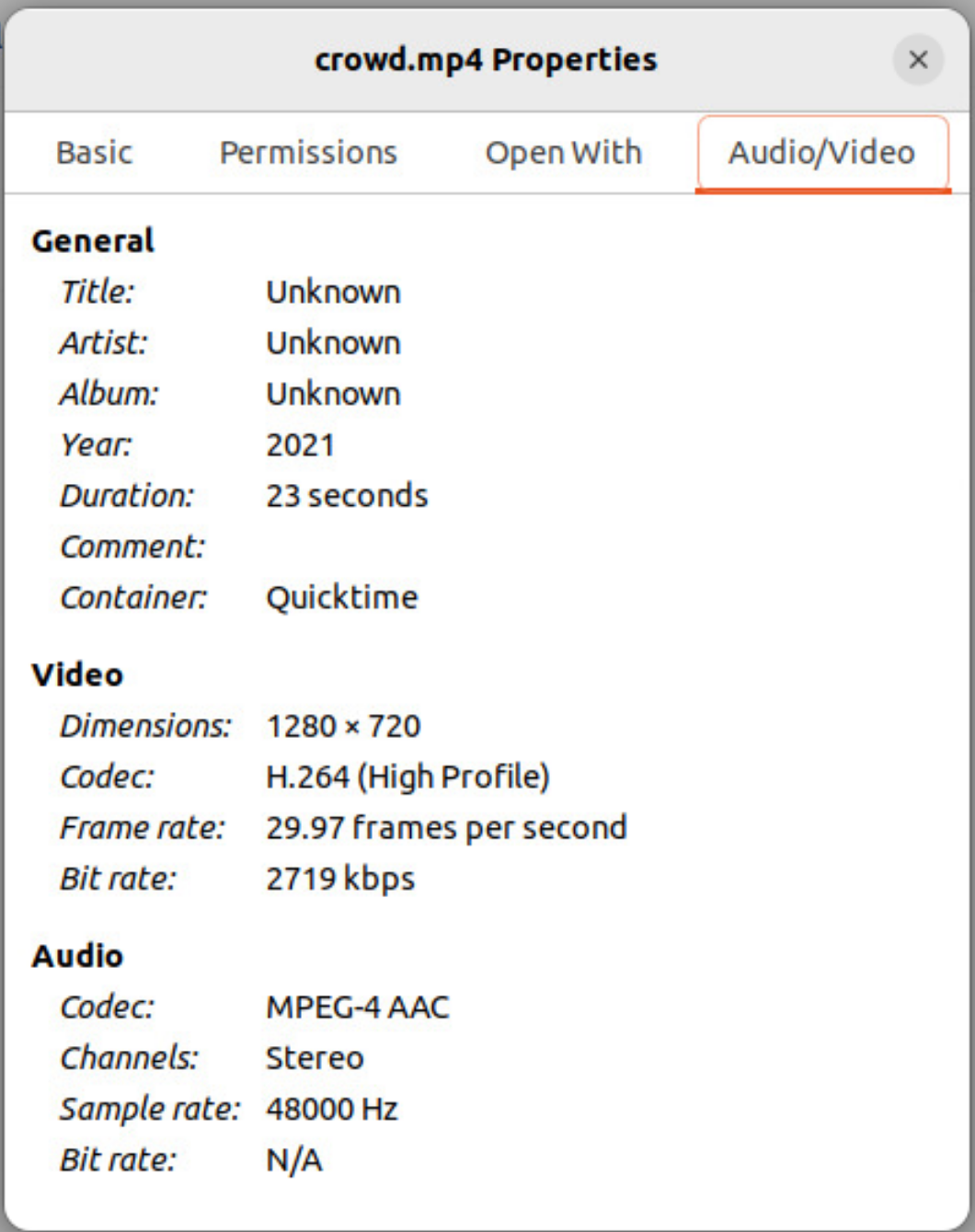

let mut decoder = Decoder::new(&PathBuf::from("/home/yimstar9/github/ObjectTracking/data/crowd.mp4").into()).unwrap();

let mut count = 0;

fn main(){

//시간체크용 타이머

let start = SystemTime::now();

for frame in decoder.decode_iter() {

if let Ok((_, frame)) = frame {

//frame(array)를 RgbImage형식으로 변환

let mut image = array_to_image(frame);

//객체 box 좌표 -> boxes

// let detect_start = SystemTime::now();

let boxes = detect_objects_on_image(&model,image.clone());

// let detect_end = SystemTime::now();

// let detect_time = detect_end.duration_since(detect_start);

// println!("detect run time: {:?}", detect_time);

//image에 box좌표로 사각형 그림

for bbox in boxes.iter(){

let rect = Rect::at(bbox.0 as i32, bbox.1 as i32).of_size((bbox.2-bbox.0) as u32,(bbox.3 - bbox.1) as u32 );

image = draw_hollow_rect(&image, rect, Rgb([255, 0, 0]));

}

//인코딩용 프레임 변환

let frm= image_to_array(image);

//인코딩

encoder

.encode(&frm,&position)

.expect("failed to encode frame");

position = position.aligned_with(&duration).add();

} else {

break;

}

count = count +1;

println!("{}",count);

}

//시간체크용 타이머

let end = SystemTime::now();

let elapsed_time = end.duration_since(start);

println!("Elapsed time: {:?}", elapsed_time);

}
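The post times the run with `SystemTime`, whose `duration_since` returns a `Result` because the wall clock can be adjusted backwards. For measuring elapsed time, `std::time::Instant` is monotonic and its `elapsed()` returns a `Duration` directly. The sketch below also reports average throughput. `average_fps` is a hypothetical helper, and the empty loop body stands in for the per-frame decode, detect, draw and encode steps.

```rust
use std::time::{Duration, Instant};

// Hypothetical helper: average frames per second over a measured interval.
fn average_fps(frames: u32, elapsed: Duration) -> f64 {
    if elapsed.is_zero() {
        return 0.0; // avoid dividing by zero on an empty measurement
    }
    frames as f64 / elapsed.as_secs_f64()
}

fn main() {
    let start = Instant::now(); // monotonic, unlike SystemTime
    let mut frames = 0u32;
    for _ in 0..100 {
        // per-frame work (decode, detect, draw, encode) would go here
        frames += 1;
    }
    let elapsed = start.elapsed();
    println!("{} frames in {:?} ({:.1} fps)", frames, elapsed, average_fps(frames, elapsed));
}
```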
